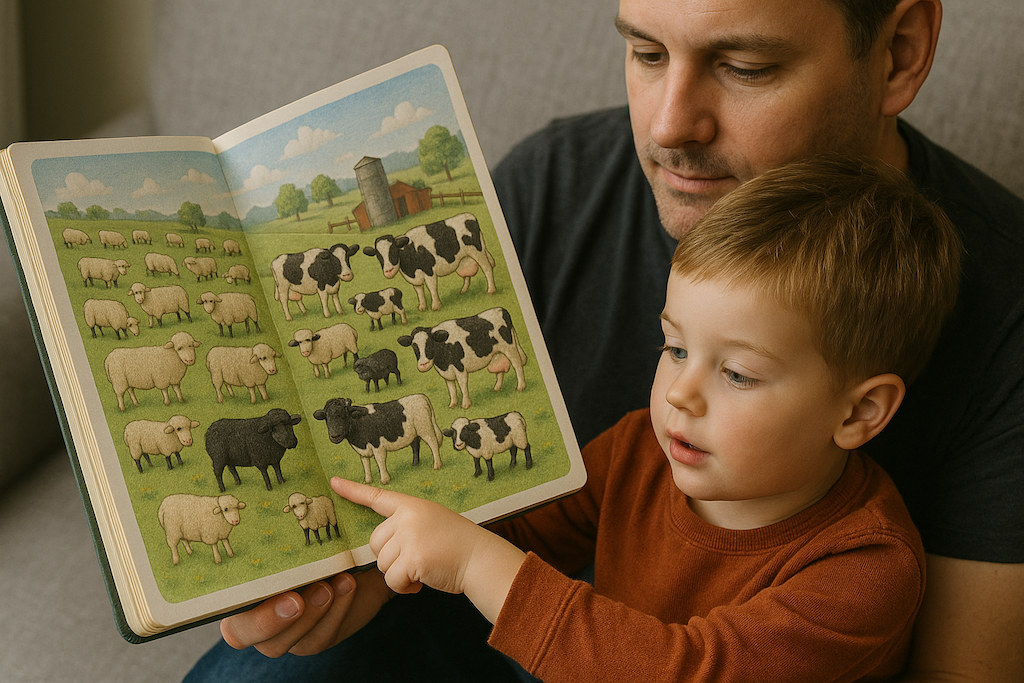

Children learn to recognize animals and their properties, and to count, early on, along with spelling words and mimicking animal sounds. It is challenging at the beginning. They play and learn the names of animals, their shapes, their edges, the sounds they make, and what they do for a living. Their parents challenge them with questions like “Where is the cow?”, “Whose sound is that?”, “How many chicks are there?”, and “Where are the black sheep?”, and the children have to connect all those concepts through language, vision, and sound, among other modalities. Eventually, they get very good at it and make their parents happy.

Vision-Language Models (VLMs), offspring of the Generative AI era, have amazed us with their ability to answer visual questions, generate images, and even navigate environments. Yet behind these impressive demos, there are still critical flaws and limitations in scene understanding and reasoning. Most studies so far have relied on simplistic scores and on scraped images with erratic captions, making it hard to interpret what is really going wrong.

This lack of interpretability is not only a concern for developers and end-users, who need to know when these systems fail, but also for researchers, who must understand why VLMs exhibit these limitations and how to overcome them.

In our latest research, we have taken on the challenge of assessing the multimodal understanding of VLMs and their ability to perform cognitive (as opposed to low-level processing) tasks. As a starting point, we have explored the reasoning abilities of VLMs in counting, a cognitive task relevant across domains such as medicine, biology, agriculture, and manufacturing, where actual performance matters.

Our findings show that counting remains a significant challenge for current state-of-the-art models. We also observed that their performance is highly sensitive to confounding factors and scene variability. We looked under the hood of these deep neural networks and, through a layer-wise analysis, uncovered how different sub-networks of the model contribute to reasoning. This investigation enabled us to rethink the training and the evaluation methodology, and to propose network architectures that have substantially increased performance with minimal computational resources. We still have a long way to go, and generative models may not be the right fit for the farm book challenge!

Check out our papers:

https://arxiv.org/pdf/2510.19555 [De|Re]constructing VLMs' Reasoning in Counting

https://arxiv.org/pdf/2506.05146 CIVET: Systematic Evaluation of Understanding in VLMs [EMNLP 2025]

#AI #GenerativeAI #Counting #ComputerVision #NLP #Innovation